Performance Analysis of Instagram Paid Promotion on a Controlled Budget

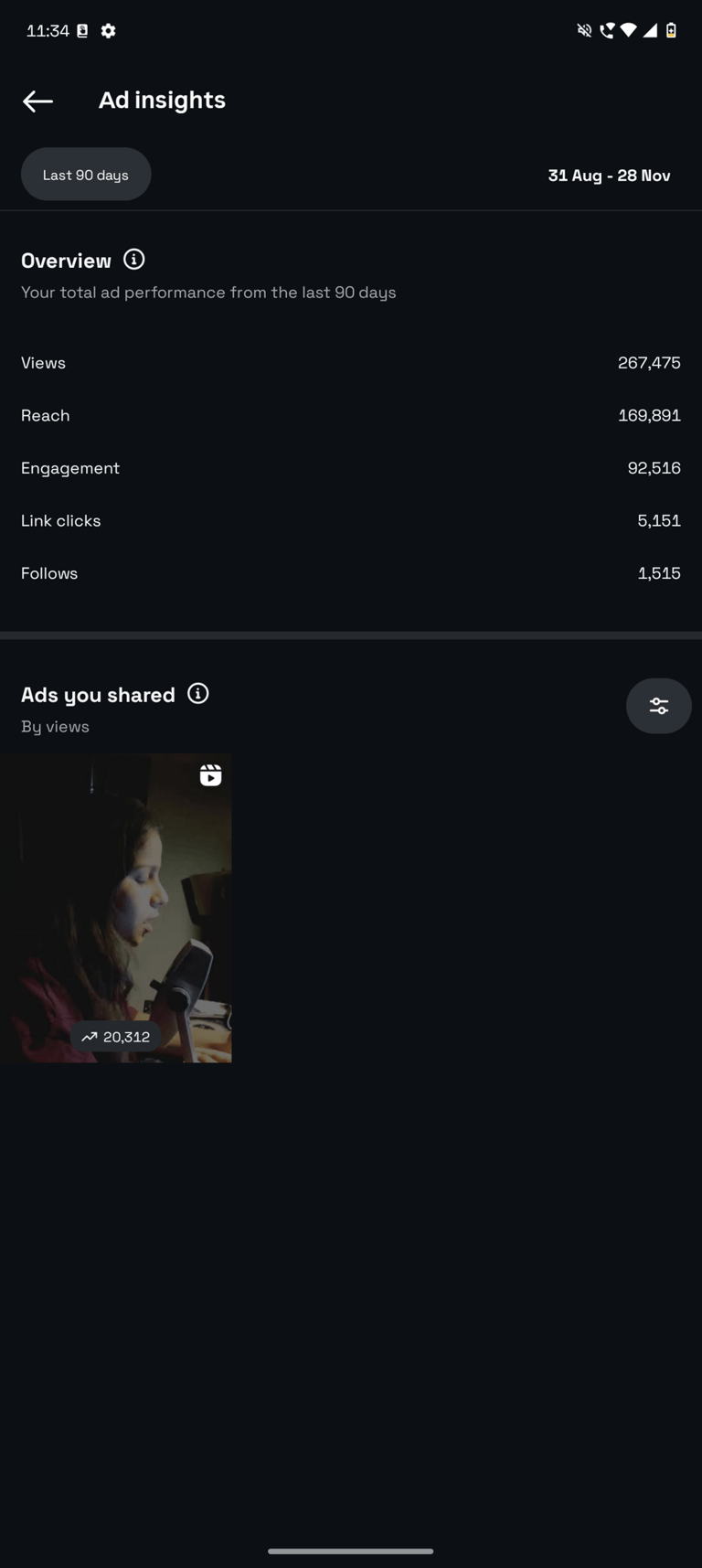

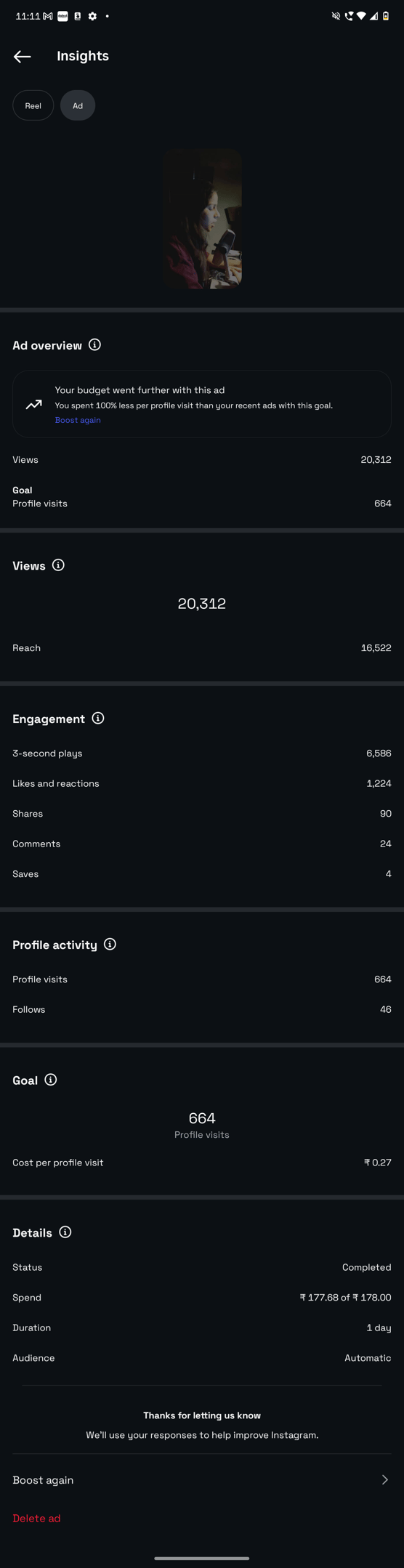

(Based on actual analytics from Instagram. Screenshots of the Insights dashboard and individual ad performance have been attached.)

Context

This case study reviews a 90-day period of paid promotion activity using controlled budgets to study how content performs once paid distribution is introduced. Rather than focusing on aggressive scaling, the intention was to observe user behavior at each stage and understand how exposure translated into engagement and profile activity.

The screenshots attached show the Insights overview as well as individual campaign cards from the ad system, which were used as the primary data source for analysis.

Account-Level Performance

According to the Insights screenshots attached:

Views: 267,475

Reach: 169,891

Interactions (likes, comments, saves): 92,516

Link clicks: 5,151

New followers: 1,515

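The relationship between these metrics matters more than any single figure. Across the period the account recorded roughly 0.54 interactions and about 1.57 views per account reached, so on average each account reached saw content more than once. New followers amounted to about 0.9% of reach. An interaction level that high relative to reach suggests that distribution was not limited to passive exposure and that a significant number of users chose to engage with the content after seeing it.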
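The ratios behind this reading can be reproduced by dividing each reported total by reach. This is a minimal sketch using only the totals from the Insights screenshots. The variable and function names are illustrative and are not part of any Instagram API.

```python
# Account-level totals as reported in the Insights screenshots (90-day period).
account = {
    "views": 267_475,
    "reach": 169_891,
    "interactions": 92_516,
    "link_clicks": 5_151,
    "new_followers": 1_515,
}


def per_reach(metric: str, totals: dict) -> float:
    """Return a reported total divided by reach (a count per account reached)."""
    return totals[metric] / totals["reach"]


for name in ("views", "interactions", "link_clicks", "new_followers"):
    print(f"{name} per account reached: {per_reach(name, account):.4f}")
```

Interactions are counted per action rather than per person, so the interaction ratio is an intensity measure, not the share of people who engaged.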

Budget Conditions

As the individual campaign screenshots show, promotions ran on budgets between ₹178 and ₹521. This range allowed efficient testing while limiting risk and keeping feedback cycles on performance short.

Limited budgets tend to produce clearer signals: content either generates a response or quickly plateaus. This made it possible to evaluate content performance without heavy influence from spend.

Campaign Review

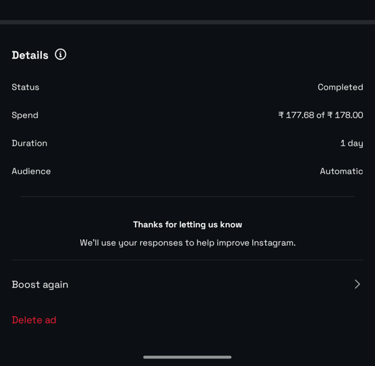

Campaign A (₹178, 1 day)

The ad card screenshot shows:

Reach: 14,522

Views: 20,312

Profile visits: 664

Follows: 46

Cost per profile visit: ₹0.27
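The reported cost per profile visit can be checked directly from the card, along with two derived figures the card does not show: cost per follow and follows per profile visit. This is a minimal sketch. It assumes the full ₹178 budget was spent, which is consistent with the reported ₹0.27, and it treats every follow as coming through a profile visit. The `Campaign` class is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Campaign:
    """Figures from one campaign card (hypothetical helper, not an Instagram API)."""
    spend_inr: float      # assumption: equals the full budget
    reach: int
    views: int
    profile_visits: int
    follows: int

    def cost_per_profile_visit(self) -> float:
        return self.spend_inr / self.profile_visits

    def cost_per_follow(self) -> float:
        return self.spend_inr / self.follows

    def visit_rate(self) -> float:
        """Share of accounts reached that visited the profile."""
        return self.profile_visits / self.reach

    def follow_rate(self) -> float:
        """Follows per profile visit (approximation: assumes follows come via visits)."""
        return self.follows / self.profile_visits


campaign_a = Campaign(spend_inr=178, reach=14_522, views=20_312,
                      profile_visits=664, follows=46)
print(f"Cost per profile visit: INR {campaign_a.cost_per_profile_visit():.2f}")
print(f"Cost per follow:        INR {campaign_a.cost_per_follow():.2f}")
print(f"Follow rate per visit:  {campaign_a.follow_rate():.1%}")
```

For Campaign A this works out to about ₹3.87 per follow, and about 6.9% of profile visits ended in a follow.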

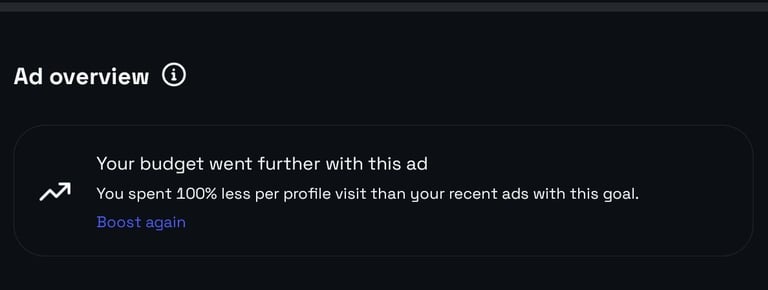

In this campaign, the platform displayed a system notification stating that the cost per profile visit had decreased by 100% compared with similar ads.

The notification was generated automatically by Instagram’s ad delivery system and is presented by the platform as a relative comparison with other ads in the same category and auction environment.

It is therefore a platform-issued signal, not a manual claim, that the campaign was more efficient than comparable promotions running at the time. Read literally, a 100% decrease would mean a cost of zero, so the figure is best treated as a rounded relative indicator; the campaign card itself reports ₹0.27 per profile visit.

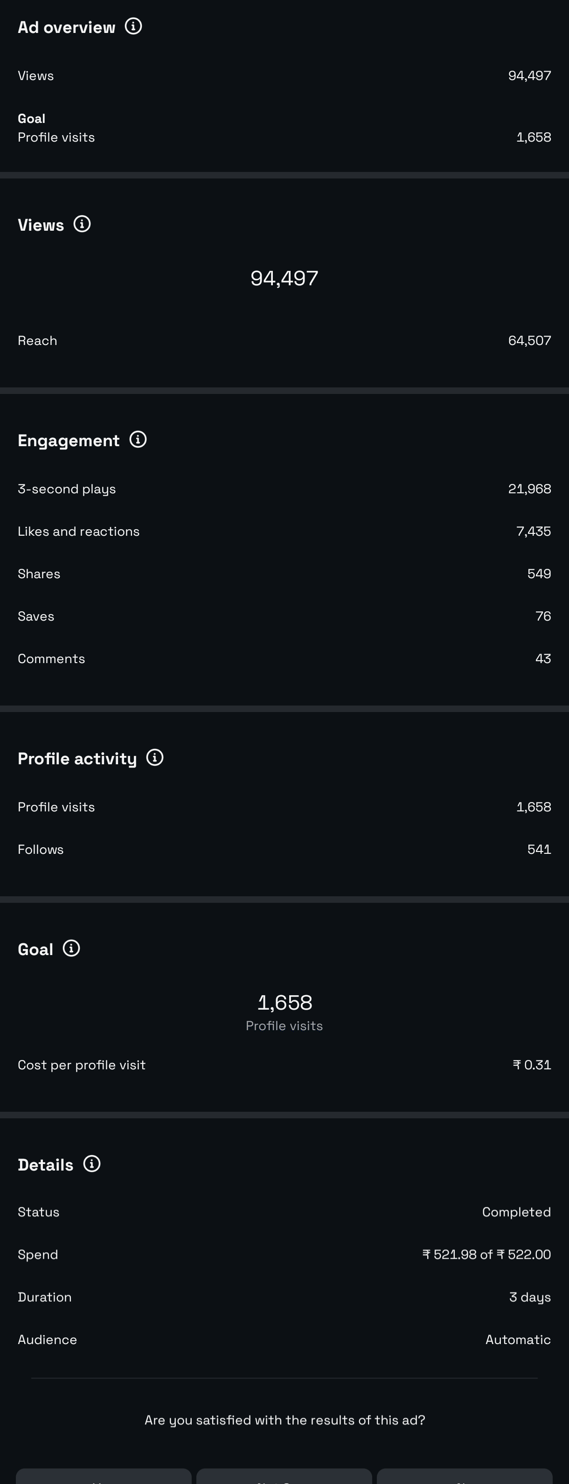

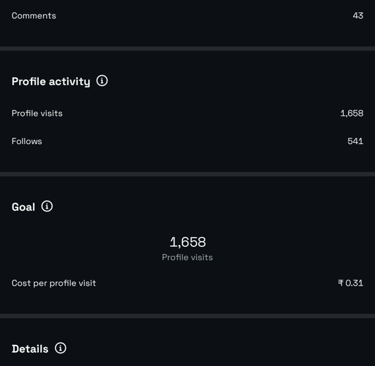

Campaign B (₹521, 3 days)

From the second campaign screenshot:

Reach: 84,507

Views: 94,497

Profile visits: 1,658

Follows: 541

Cost per profile visit: ₹0.31

Although the budget was roughly 2.9 times larger and reach about 5.8 times wider than in Campaign A, the cost per profile visit rose only modestly, from ₹0.27 to ₹0.31. Distribution expanded without a sharp decline in efficiency.
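The scaling comparison between the two campaigns comes down to a few ratios. This sketch uses only the figures from the two campaign cards and assumes each budget was fully spent. The names are illustrative.

```python
def cost_per_visit(spend_inr: float, profile_visits: int) -> float:
    """Spend divided by profile visits, as shown on the campaign cards."""
    return spend_inr / profile_visits


# Campaign card figures; spend assumes each budget was fully used.
a = {"spend": 178, "reach": 14_522, "visits": 664}
b = {"spend": 521, "reach": 84_507, "visits": 1_658}

spend_multiple = b["spend"] / a["spend"]   # how many times more was spent on B
reach_multiple = b["reach"] / a["reach"]   # how many times wider B's reach was
cpv_a = cost_per_visit(a["spend"], a["visits"])
cpv_b = cost_per_visit(b["spend"], b["visits"])
cpv_change = (cpv_b - cpv_a) / cpv_a       # relative change in cost per visit

print(f"spend x{spend_multiple:.2f}, reach x{reach_multiple:.2f}, "
      f"cost per visit {cpv_a:.2f} -> {cpv_b:.2f} ({cpv_change:+.0%})")
```

Computed from unrounded figures, spend rises about 2.9 times, reach about 5.8 times, and cost per visit about 17%. Because reach grew faster than spend, the cost of reaching each account actually fell even as cost per visit edged up.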

Behavioral Interpretation

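Several patterns can be seen across the screenshots:

Profile visits grew with reach (664 in Campaign A, 1,658 in Campaign B), suggesting viewers were not simply scrolling past, although the share of reached accounts that visited the profile fell from about 4.6% to about 2.0% as distribution widened.

Follows grew faster than visits: about 6.9% of profile visits led to a follow in Campaign A, compared with about 32.6% in Campaign B, indicating that many visitors found the profile relevant enough to keep engaging.

Cost per visit stayed within a narrow range (₹0.27 to ₹0.31) while reach grew almost sixfold, which suggests reasonably consistent traffic quality.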

Short-form video formats (Reels) were responsible for most of the reach and interaction, reinforcing the effectiveness of video content for discovery.
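The funnel patterns in this list can be checked with simple ratios from the two campaign cards. This is a minimal sketch. The dictionary layout and function name are hypothetical. Follows are divided by profile visits as an approximation, since some follows may not pass through a visit.

```python
# Campaign card figures as reported; rates are simple ratios of those counts.
campaigns = {
    "A": {"reach": 14_522, "views": 20_312, "visits": 664, "follows": 46},
    "B": {"reach": 84_507, "views": 94_497, "visits": 1_658, "follows": 541},
}


def funnel_rates(c: dict) -> dict:
    """Return views per account reached, visit rate, and follows per visit."""
    return {
        "views_per_reach": c["views"] / c["reach"],
        "visit_rate": c["visits"] / c["reach"],
        "follows_per_visit": c["follows"] / c["visits"],
    }


for name, counts in campaigns.items():
    rates = funnel_rates(counts)
    print(name, {key: round(value, 4) for key, value in rates.items()})
```

On these figures the visit rate falls from about 4.6% to about 2.0%, while follows per visit rise from about 6.9% to about 32.6%. The two rates moved in opposite directions as reach widened.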

Content Selection Method

Screenshots also reflect that promotion was not applied uniformly. Content chosen for promotion typically displayed early organic activity before receiving spend.

Selection criteria included:

initial engagement velocity

saves and shares

completion rates

comment frequency

Paid distribution was used to amplify content that had already shown signs of user interest rather than attempting to force exposure on low-response content.
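The selection criteria can be expressed as a simple gate that a post must clear before receiving spend. This is a hypothetical sketch. The case study gives no numeric thresholds, so every cut-off, every field name, and the rule that all four criteria must pass are assumptions for illustration. They are not the method actually used.

```python
from dataclasses import dataclass


@dataclass
class PostStats:
    """Organic stats for one post before any spend (hypothetical fields)."""
    hours_live: float
    interactions: int        # engagement actions recorded so far
    saves: int
    shares: int
    comments: int
    reach: int
    completion_rate: float   # share of plays watched to the end, 0..1


# Hypothetical thresholds; the case study does not state its cut-offs.
MIN_INTERACTIONS_PER_HOUR = 20.0
MIN_SAVE_SHARE_RATE = 0.02
MIN_COMPLETION_RATE = 0.30
MIN_COMMENT_RATE = 0.005


def qualifies_for_promotion(p: PostStats) -> bool:
    """Return True only if every early organic signal clears its threshold."""
    if p.reach == 0 or p.hours_live <= 0:
        return False
    velocity = p.interactions / p.hours_live           # engagement velocity
    save_share_rate = (p.saves + p.shares) / p.reach   # saves and shares
    comment_rate = p.comments / p.reach                # comment frequency
    return (
        velocity >= MIN_INTERACTIONS_PER_HOUR
        and save_share_rate >= MIN_SAVE_SHARE_RATE
        and p.completion_rate >= MIN_COMPLETION_RATE
        and comment_rate >= MIN_COMMENT_RATE
    )
```

Requiring every signal to pass is a deliberately conservative choice. A weighted score would let one strong signal offset a weak one.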

Platform Behavior

The delivery pattern visible in the screenshot data is consistent with the platform adjusting distribution in response to user behavior such as watch time and interaction frequency. As engagement held steady, delivery expanded with only a modest rise in cost per profile visit (₹0.27 to ₹0.31), which fits how the platform's recommendation system is generally understood to reward engagement.

Audience & Interest Structuring

Along with creative testing, I also worked on the audience layer instead of leaving every decision to the platform. I built specific audience categories based on interests, behavior patterns, and content themes rather than relying only on broad targeting. Interests were added deliberately, not randomly, to align ad delivery with the users most likely to explore the profile and engage further. I also observed that different interest clusters reacted differently to the same content, which helped narrow targeting over time. This allowed distribution to improve in quality even while the budget stayed small, because ads were shown to users whose activity already indicated relevance rather than pushed blindly to wide groups.
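Comparing interest clusters can be sketched as filtering by cost per profile visit and then ranking by follows per visit. This sketch assumes results can be exported per cluster with spend, visits, and follows. The `ClusterResult` type, the cost ceiling, and the ranking rule are hypothetical and do not describe the exact process used here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ClusterResult:
    name: str             # hypothetical interest-cluster label
    spend_inr: float
    profile_visits: int
    follows: int


def rank_clusters(results: list[ClusterResult], max_cost_per_visit: float) -> list[str]:
    """Keep clusters within the cost ceiling, best follows-per-visit first."""
    kept = [
        r for r in results
        if r.profile_visits > 0
        and r.spend_inr / r.profile_visits <= max_cost_per_visit
    ]
    # Sort by follows per visit (descending), then by cheaper visits as a tie-breaker.
    kept.sort(key=lambda r: (-r.follows / r.profile_visits,
                             r.spend_inr / r.profile_visits))
    return [r.name for r in kept]
```

Filtering on cost first and ranking on follows second mirrors the idea of narrowing toward clusters that are both affordable and likely to convert.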

Limitations

This analysis is based on platform-provided analytics visible in the attached screenshots and does not include external attribution or revenue tracking. The findings therefore relate to acquisition performance at the engagement and profile conversion level rather than downstream monetization.

Conclusion

This case demonstrates how paid distribution on Instagram can be used as a performance learning environment rather than only as a traffic tool. The attached screenshots provide context for how interaction, profile activity, and cost efficiency behaved under real usage conditions.

The primary learning is that reliable acquisition performance emerges from:

promoting content with prior organic response

observing behavioral response rather than chasing reach

scaling cautiously while watching efficiency

relying on engagement, not spend, as the main growth signal